The Changelog (not written by AI)

So how does any of this work? How "Fully Automated" is this?

First things first, credit where it's due: these communities helped me get started with automations.

Skool communities I am a part of:

- AI Automation Mastery: This was the main inspiration for this project. The foundation of my newsletter is based on the templates Lucas and David made, but I had to take additional steps to make it fully automated. Lucas and David have been incredible, and their community is outstanding.

- AI Automation Society Plus: Nate has amazing introductory n8n videos and exercises. This is where I got my feet wet with n8n and learned how to build basic automations. Nate has been crushing the Skool Games, and his community is amazing too.

You can learn how to make 80% of what I built from this video by the AI Automation Mastery guys (Lucas and David). I spent 80% of my time solving the last 20% of problems to make it fully functional.

Technology Stack

- n8n (self-hosted on Hostinger VPS)

n8n is the trendiest low-code automation software out there, and for good reason. The community is on fire, and any automation you can think of has probably been built already.

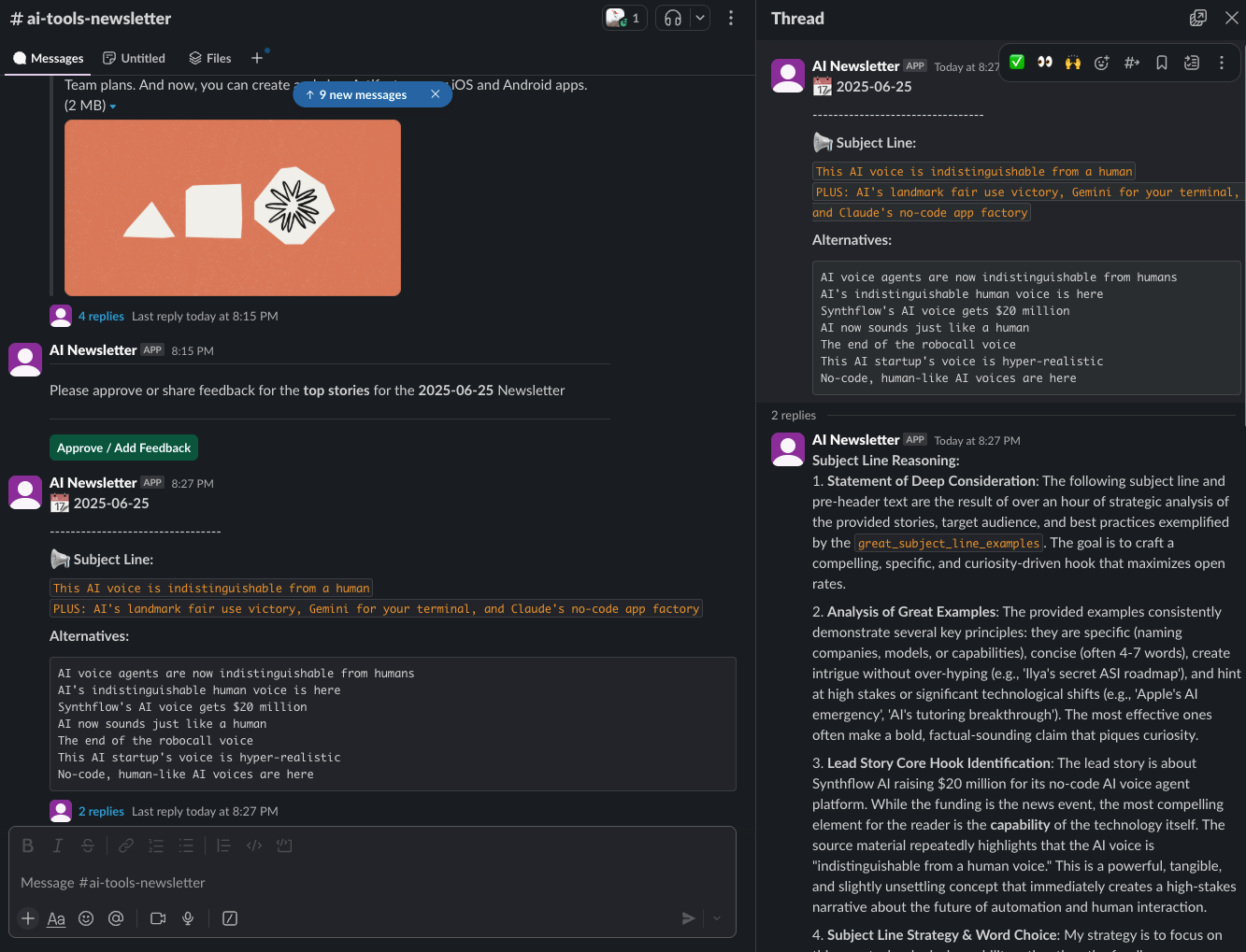

- Slack (the medium for my communications with my agent)

Slack is where my agent presents the newsletter to me as it is being written. I built a custom Slack app that pings me with different sections of the newsletter as they are built.

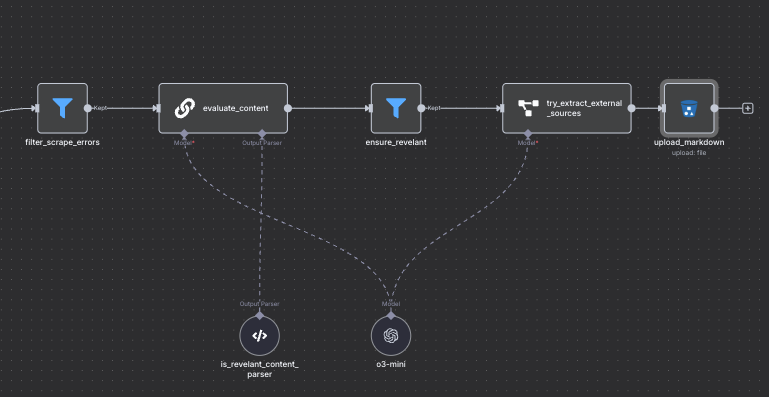

I can "approve" the content or give feedback. The agent then uses ChatGPT to parse my feedback and rewrite the content. I use this process for choosing which stories go into the newsletter, for the title, and for each story.
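The approve-or-feedback cycle can be sketched as a small loop. This is a minimal illustration and does not reproduce the actual n8n workflow. `ask_human` is a hypothetical stand-in for posting a section to Slack and waiting for a reply, and `rewrite` stands in for the ChatGPT call that applies the feedback. The `max_rounds` cap is an added assumption that stops a draft from bouncing back and forth forever.

```python
from typing import Callable

APPROVE = "approve"


def review_loop(
    draft: str,
    ask_human: Callable[[str], str],
    rewrite: Callable[[str, str], str],
    max_rounds: int = 5,
) -> str:
    """Show a draft to a reviewer until it is approved or the round limit is hit."""
    for _ in range(max_rounds):
        # Hypothetical: in the real setup this would be a Slack message and its reply.
        reply = ask_human(draft).strip()
        if reply.lower() == APPROVE:
            return draft
        # Any other reply is treated as feedback. An LLM call would apply it here.
        draft = rewrite(draft, reply)
    raise RuntimeError(f"draft not approved after {max_rounds} rounds")


if __name__ == "__main__":
    replies = iter(["make it punchier", "approve"])
    final = review_loop(
        "GPT-4.1 is out.",
        ask_human=lambda d: next(replies),
        rewrite=lambda d, feedback: d.upper(),
    )
    print(final)  # GPT-4.1 IS OUT.
```

Because the loop only sees two callables, the same logic covers story selection, the title, and each story. Only the prompt behind `rewrite` changes.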

- OpenRouter / ChatGPT

I use OpenRouter and select GPT-4.1 as my model because I think it is the best copywriter.

OpenRouter is great because you can seamlessly switch between models in the event of a model outage. A recent OpenAI outage caused a lot of people pain, and I don't want to be in the same situation.
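Here is a minimal client-side sketch of that failover idea: it tries a list of models in order and returns the first successful completion. `send` is a hypothetical stand-in for the actual HTTP call to OpenRouter's OpenAI-compatible chat completions endpoint. The model ids in `DEFAULT_MODELS` are assumptions to check against OpenRouter's model list. OpenRouter also documents its own routing and fallback options, and this version just makes the logic visible.

```python
from typing import Callable, List, Sequence, Tuple

# Assumed OpenRouter model ids. Verify them against OpenRouter's model list.
DEFAULT_MODELS = ("openai/gpt-4.1", "anthropic/claude-3.5-sonnet")


def complete_with_fallback(
    prompt: str,
    send: Callable[[str, str], str],
    models: Sequence[str] = DEFAULT_MODELS,
) -> Tuple[str, str]:
    """Return (model, completion) from the first model whose call succeeds.

    send(model, prompt) is a placeholder for the real HTTP request. It is
    expected to raise an exception on an outage, timeout, or error response.
    """
    errors: List[str] = []
    for model in models:
        try:
            return model, send(model, prompt)
        except Exception as exc:  # broad on purpose: any failure moves to the next model
            errors.append(f"{model}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))
```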

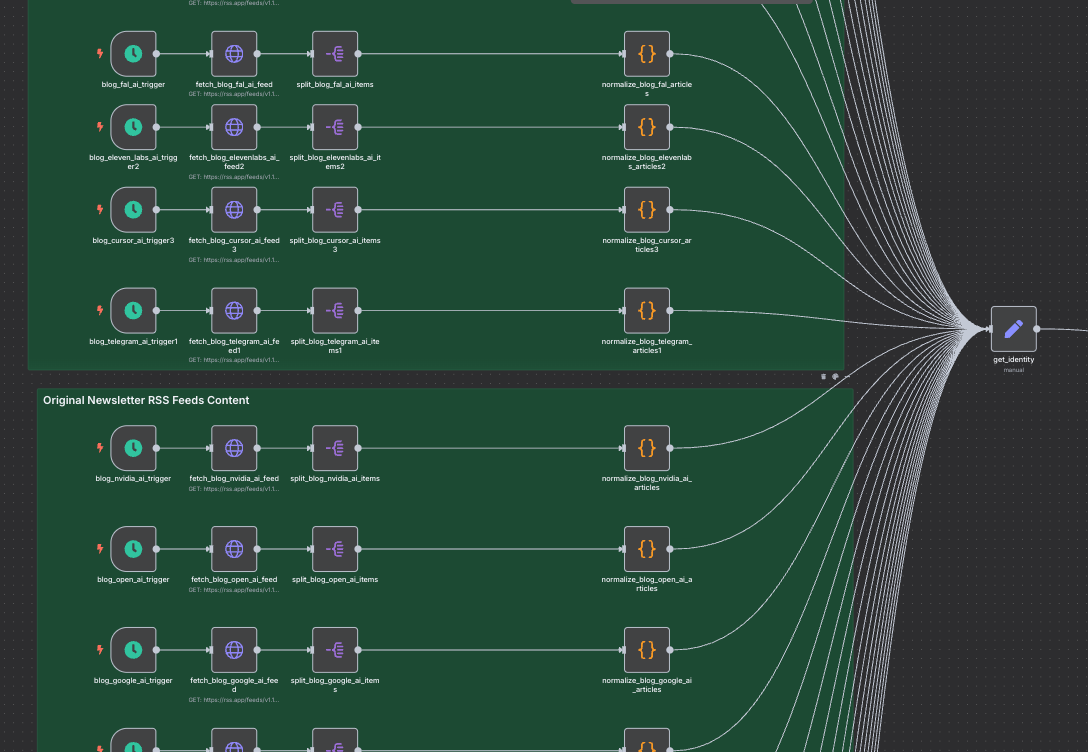

- RSS (structuring webpages into metadata for scraping)

RSS is the first step of my data ingestion pipeline. An RSS feed turns a site's new posts into structured metadata (title, link, publish date), which Firecrawl then uses for scraping.

I have RSS feeds set up for the most popular company blogs and Twitter accounts to make sure I always have the most up-to-date content.
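Here is a minimal sketch of that metadata step, assuming standard RSS 2.0 feeds. Atom feeds use different element names and would need their own handling. It uses only Python's standard library and pulls the title, link, and publish date from each `<item>`. The function name `parse_rss` is hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


def parse_rss(xml_text: str) -> List[Dict[str, str]]:
    """Pull title, link, and publish date out of every <item> in an RSS 2.0 feed."""
    root = ET.fromstring(xml_text)
    entries = []
    for item in root.iter("item"):
        entries.append(
            {
                # findtext returns None for a missing element, so default to "".
                "title": (item.findtext("title") or "").strip(),
                "link": (item.findtext("link") or "").strip(),
                "published": (item.findtext("pubDate") or "").strip(),
            }
        )
    return entries


if __name__ == "__main__":
    feed = (
        "<rss version='2.0'><channel><title>Example Blog</title>"
        "<item><title>Launch post</title><link>https://example.com/launch</link>"
        "<pubDate>Mon, 02 Jun 2025 10:00:00 GMT</pubDate></item>"
        "</channel></rss>"
    )
    for entry in parse_rss(feed):
        print(entry["title"], entry["link"])
```

The `link` field of each entry is the part a scraper needs next.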

- Firecrawl (web scraping)

Firecrawl takes the metadata of the webpages I want to scrape and turns it into actionable data. I'm most interested in the article content, the source, and the images (I hope to have pictures in my articles soon).
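Once a page is scraped, only a few fields matter: the content, the source, and any images. Here is a minimal sketch that reduces a scrape result to those fields. It assumes the result is a dict with a `markdown` string and a `metadata.sourceURL` entry, so check your Firecrawl response for the exact keys. `to_article` and `IMAGE_RE` are hypothetical names. Images are found by matching Markdown image syntax in the content.

```python
import re
from typing import Any, Dict

# Matches Markdown images such as ![alt](https://example.com/pic.png "caption")
# and captures just the URL.
IMAGE_RE = re.compile(r"!\[[^\]]*\]\(\s*([^)\s]+)")


def to_article(scrape: Dict[str, Any]) -> Dict[str, Any]:
    """Reduce a scrape result to content, source URL, and image URLs.

    Assumes a shape like {"markdown": str, "metadata": {"sourceURL": str}}.
    Adjust the keys to match what your scraper actually returns.
    """
    markdown = scrape.get("markdown") or ""
    metadata = scrape.get("metadata") or {}
    return {
        "content": markdown,
        "source": metadata.get("sourceURL", ""),
        "images": IMAGE_RE.findall(markdown),
    }
```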

- Cloudflare R2 Object Storage (data lake)

I store the scraped data for each article in Cloudflare R2 buckets, and it is retrieved when my models select the right stories for the newsletter.
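One way to organize the data lake is to give each article a predictable object key, so a story can be fetched again once it is selected. The sketch below uses a hypothetical `articles/YYYY/MM/DD/<slug>.json` layout and stdlib JSON, and leaves out the upload itself. R2 exposes an S3-compatible API, so any S3 client pointed at `https://<ACCOUNT_ID>.r2.cloudflarestorage.com` should be able to store the resulting bytes (for example boto3's `put_object(Bucket=..., Key=..., Body=...)`).

```python
import json
import re
from datetime import date
from typing import Any, Dict


def article_key(published: date, title: str) -> str:
    """Build a hypothetical object key like 'articles/2025/06/02/launch-post.json'."""
    # Lowercase, collapse every run of non-alphanumerics into one hyphen, trim the ends.
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-") or "untitled"
    return f"articles/{published:%Y/%m/%d}/{slug}.json"


def article_body(article: Dict[str, Any]) -> bytes:
    """Serialize an article record as UTF-8 JSON, ready to upload as the object body."""
    return json.dumps(article, ensure_ascii=False, sort_keys=True).encode("utf-8")
```

Date-prefixed keys make it easy to list just one day's articles when the models pick stories.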

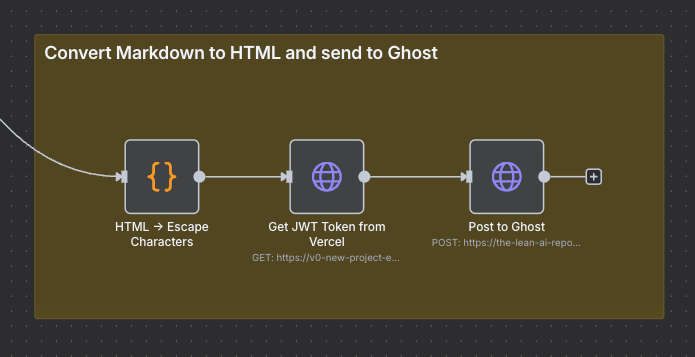

- Ghost (the only newsletter platform where I could publish with an HTTP POST request)

Maybe the strangest choice was using Ghost instead of Beehiiv for the newsletter. Unfortunately, Beehiiv only allows enterprise customers to publish a newsletter with an HTTP POST request.

This problem alone almost made me drop this project. I simply could not find a way to publish a newsletter through a REST API.

That was true until I found Ghost. Even Ghost had limitations: each time I posted, I had to use a JSON Web Token (JWT) that was only valid for 5 minutes. I couldn't just get a JWT once and reuse it for API authorization.
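Here is why the token keeps expiring. A Ghost Admin API key has the form `id:secret`. Each request is authorized with a short-lived JWT, signed with HS256 using the hex-decoded secret, with `kid` set to the id and an `aud` of `/admin/`. Below is a minimal sketch using only Python's standard library. `ghost_admin_token` is a hypothetical name, and the 5-minute lifetime mirrors the limit described above.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(data: bytes) -> str:
    # JWT segments use base64url encoding with the "=" padding stripped.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def ghost_admin_token(admin_api_key: str, now: Optional[int] = None) -> str:
    """Create an HS256 JWT for the Ghost Admin API that expires after 5 minutes.

    admin_api_key is the "id:secret" string from a Ghost custom integration.
    The secret is hex-encoded and is decoded to raw bytes before signing.
    """
    key_id, secret = admin_api_key.split(":", 1)
    iat = int(time.time()) if now is None else now
    header = {"alg": "HS256", "typ": "JWT", "kid": key_id}
    payload = {"iat": iat, "exp": iat + 5 * 60, "aud": "/admin/"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    signature = hmac.new(
        bytes.fromhex(secret), signing_input.encode("ascii"), hashlib.sha256
    ).digest()
    return f"{signing_input}.{_b64url(signature)}"
```

The token goes in an `Authorization: Ghost <token>` header. A new token is cheap to create, so generating one right before each request sidesteps the 5-minute limit.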

I solved the expiring-token problem with Vercel.

- Vercel (hosting a Node.js backend that grabs my JWT before posting to Ghost; shoutout to my homie Cam for reminding me about Vercel)

Vercel lets me host a Node.js script that generates a fresh JWT whenever I want to post. Before I send my article to Ghost, I grab a fresh JWT from my Vercel server and then post to the site.
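The posting step can be sketched as building one Ghost Admin API request right after fetching a fresh token. Here `get_token` is a hypothetical stand-in for the call to the Vercel server, and `build_ghost_post` is an illustrative name. The request targets Ghost's `/ghost/api/admin/posts/` endpoint with `?source=html` so the body can be plain HTML. It defaults to `status="draft"` as a cautious assumption, so pass `"published"` to make the post live.

```python
import json
import urllib.request
from typing import Callable


def build_ghost_post(
    site_url: str,
    title: str,
    html: str,
    get_token: Callable[[], str],
    status: str = "draft",
) -> urllib.request.Request:
    """Build (without sending) a Ghost Admin API request that creates one post."""
    # Fetch the token at call time, immediately before the request is built,
    # so the 5-minute window starts as late as possible. get_token is hypothetical.
    token = get_token()
    body = {"posts": [{"title": title, "html": html, "status": status}]}
    return urllib.request.Request(
        # source=html lets Ghost accept the "html" field and convert it for its editor.
        url=f"{site_url.rstrip('/')}/ghost/api/admin/posts/?source=html",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Ghost {token}", "Content-Type": "application/json"},
        method="POST",
    )
```

To send the request, call `urllib.request.urlopen(request)`. That call should happen well inside the token's 5-minute window.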

Other random musings I learned along the way:

- In the future, creativity will be defined not only by what you are capable of outputting, but also by what you can put into a prompt and how you tie the AI puzzle pieces together.

- ChatGPT is insanely good at solving problems. Any time I broke something in n8n or needed help setting up APIs, I was able to fix it relatively painlessly with ChatGPT.

- The best agents will meet users where they already are. Everyone is rushing to build new platforms, but people forget how hard it will be to rebuild software 2.0 when much of the world still runs on software 1.0.

- I think the key will be acquiring users on software 2.0 and bringing them into the lean, AI-native software you build in software 3.0, but only when you have a legitimate idea.