The Changelog v1 - We have Twitter now & "Signal vs. Noise" (update from Spencer)

TL;DR (too long; didn't read)

- Thanks for signing up - means a lot

- I am now scraping Twitter/X and storing it in my data lake.

- My next goals are to:

- Automate cross-posting on LinkedIn, X, Instagram, Threads, TikTok, and YouTube (including short-form video content)

- Automate responses in my DMs

- I will work on my content machine, The Lean AI Report, until it is mostly self-sufficient (minimal human in the loop).

- I hope by the end of July I can focus on building a product and will share those updates here.

- Expect these updates when I have something to say. I won't be bothered if you don't read them - this is a nice brain dump/reflection for me.

Have a great week, everyone!

I'll make these posts when there are meaningful changes to the stack that operates The Lean AI Report and share learnings as well. I might sneak the occasional tidbit from my personal life too.

First and foremost, if you are reading this, then you gave at least one s#*t about what I have to say and signed up for this newsletter, which genuinely means more than I can put into words. I was honestly surprised we got to 20 subscriptions, so expect more posts on LinkedIn (sorry not sorry). I plan on being extra shameless now...

Automation Upgrades

Since I launched this past week - I've made two major changes to how the Newsletter Automation works:

- The Twitter/X Scraper

One of the most important pieces for this project is going to be learning how to track trends and meaningful updates on Twitter (I go back and forth between Twitter and X).

In the past month, I've learned how relevant X is in the tech world. This is where everyone shouts into the digital void. Some voices are louder than others, but most companies have some presence there - especially tech companies. Founders also like to go there to share their opinions or meaningful company updates.

Scraping Twitter wasn't that straightforward though. Unlike the Newsletter Generator - I had no template to work off of, so I needed to build this automation entirely from scratch.

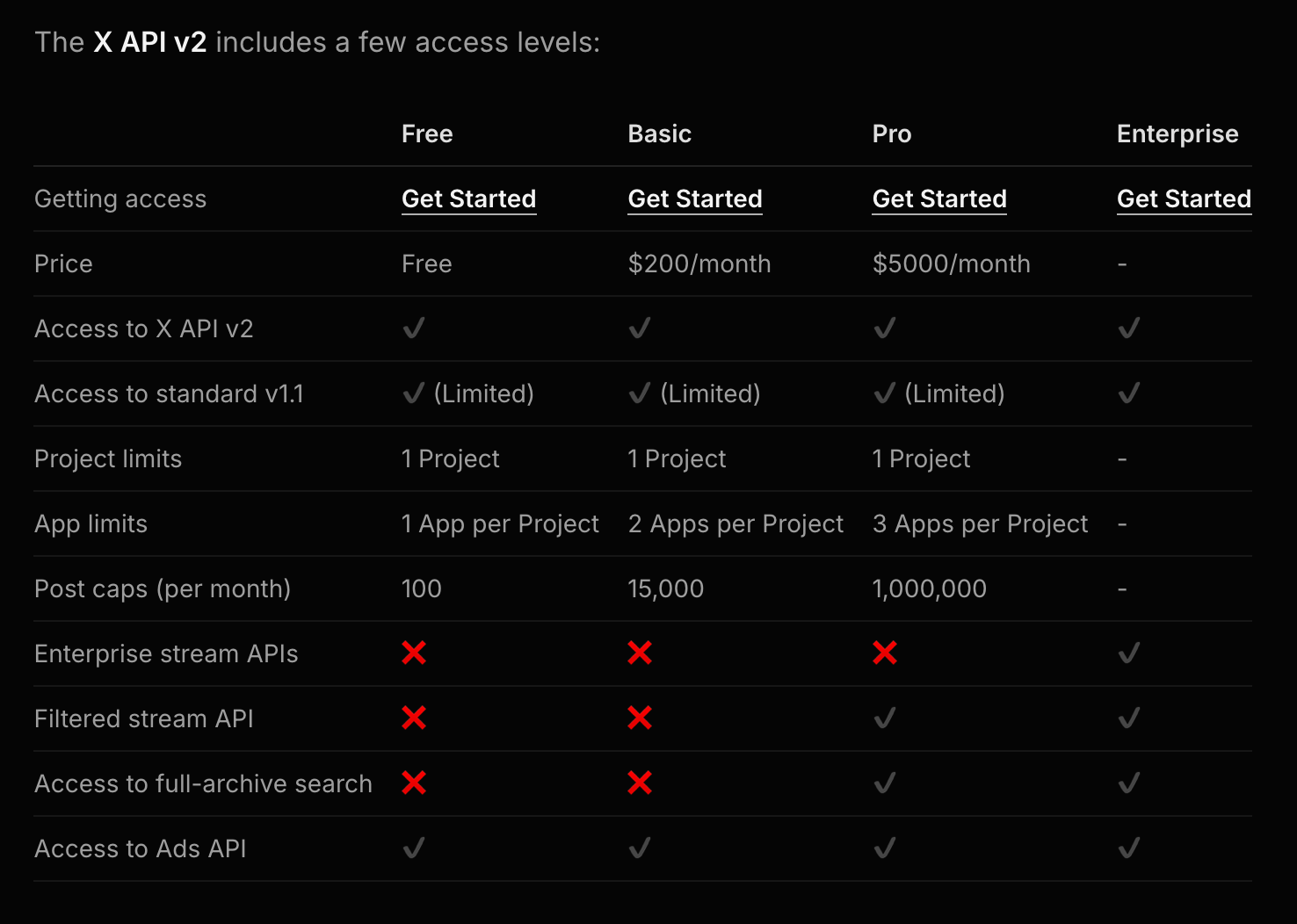

It took me a week from starting to build this automation to making it fully operational. Last Sunday (6/29) I made a serious effort during a mini-hackathon with my friends. The biggest challenge was finding a reliable Twitter Scraper API that was not the official Twitter API, which was far too expensive for me.

I was actually able to connect with the creators of FeedRecap.com, which is another kind of Twitter aggregator. That team used the Scraper Tech Twitter API. I tried using it but struggled with it. The documentation was confusing, and although I was eventually able to scrape accounts with it, the Apify Twitter API was the best and easiest solution (despite some of its shortcomings).

The biggest problem I have experienced with the Apify Twitter Scraper so far is that it is unreliable - it does not always scrape the tweets when the automation is fired. Reliability is crucial for the automations I am building. Everything in these systems functions off of trust - if this doesn't work for one day, the quality of my Newsletter decreases considerably.

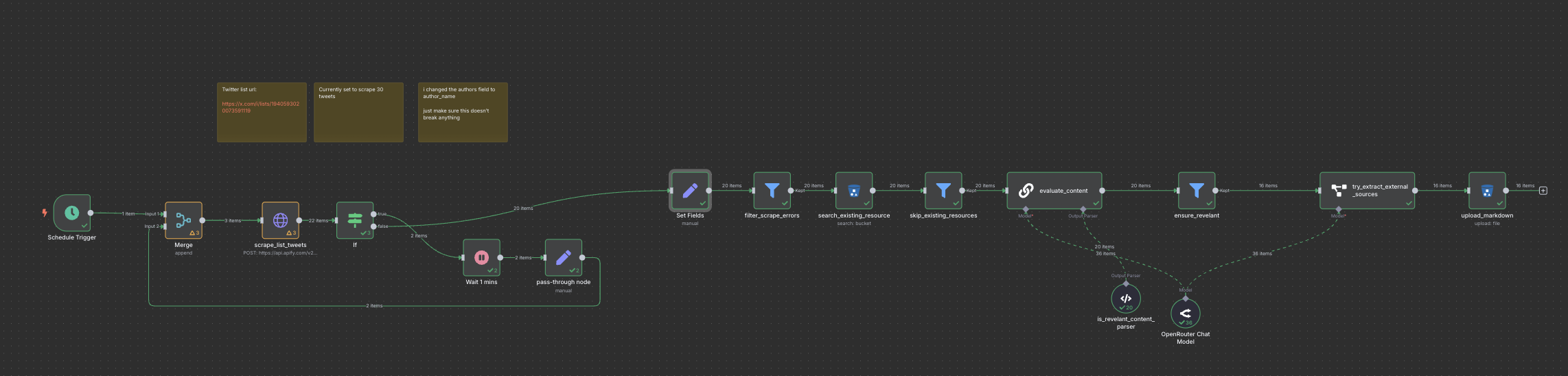

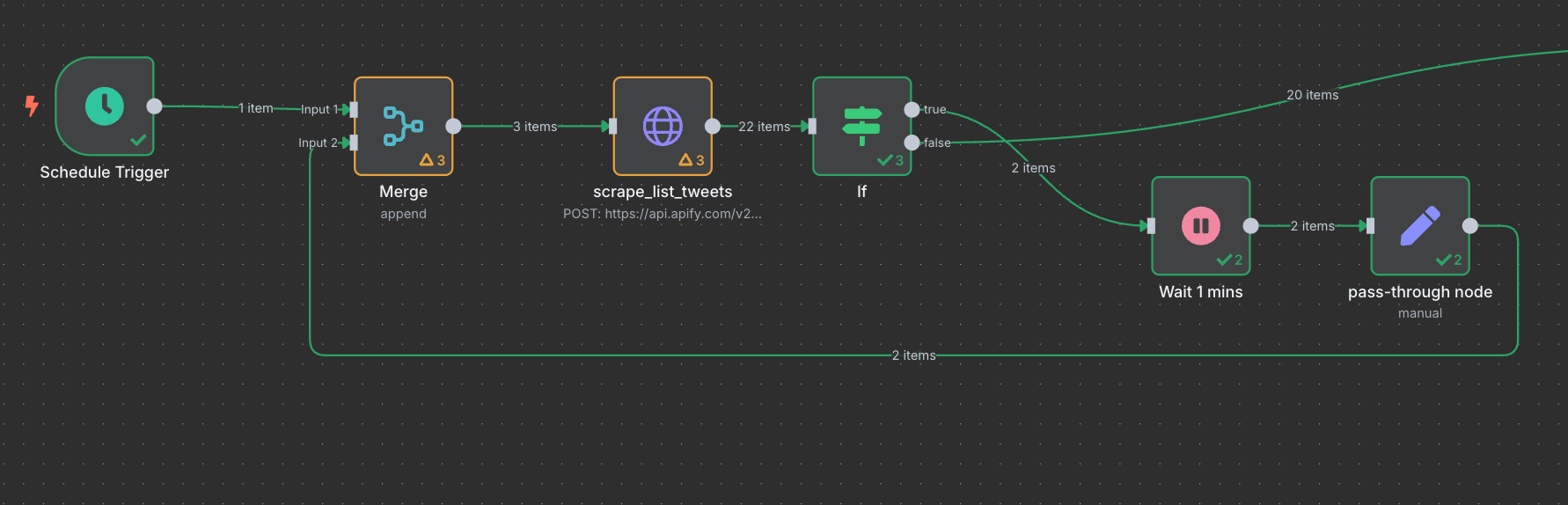

To solve this problem, I set up a loop in n8n that retries up to 10 times and stops as soon as the API fires successfully. I could probably crank that number higher - but haven't found the need yet.

Aside from that, this Twitter scraper is relatively inexpensive. I was overcharged on one occasion, and although that has not happened again, it is something to keep an eye on.
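For anyone who thinks in code rather than n8n nodes, the retry idea looks roughly like this in Python. This is only a sketch, not the actual n8n workflow: `retry_until_success` and `make_flaky_scraper` are hypothetical names made up for illustration, and the stand-in scraper just returns nothing a set number of times instead of calling Apify.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def retry_until_success(
    call: Callable[[], Optional[T]],
    max_attempts: int = 10,
    delay_seconds: float = 0.0,
) -> Optional[T]:
    """Run `call` up to `max_attempts` times.

    Returns the first non-empty result, or None if every attempt
    raised an exception or came back empty.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            result = call()
        except Exception:
            # Treat any error as a failed attempt and try again.
            result = None
        if result:
            return result
        # Optionally pause between attempts (but not after the last one).
        if attempt < max_attempts and delay_seconds > 0:
            time.sleep(delay_seconds)
    return None


def make_flaky_scraper(failures_before_success: int):
    """Hypothetical stand-in for the scraper: empty results at first, then tweets."""
    state = {"calls": 0}

    def scrape():
        state["calls"] += 1
        if state["calls"] <= failures_before_success:
            return []  # simulates a run that scraped nothing
        return [{"text": "example tweet"}]

    return scrape, state


if __name__ == "__main__":
    scrape, state = make_flaky_scraper(failures_before_success=3)
    tweets = retry_until_success(scrape, max_attempts=10)
    print(tweets, "after", state["calls"], "calls")
```

The cap matters: without it, a scraper that is down for the day would loop forever instead of failing in a way you can notice.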

Now that the Twitter Scraper is set up, the automation will be able to reference Tweets to write stories, or reference them when selecting articles. This is a huge breakthrough - it makes my content credible, timely and relevant.

It will be very relevant for the next evolution of my content machine as well.

- Newsletter Makeover

If you were here on day one, and then on day two - you may have noticed a few differences. The UI and color palette of my Newsletter changed completely. I don't have screenshots, but I discovered that on mobile - the landing page of my Newsletter did not feature a subscribe button.

If you know anything about sales or growing a product - you know that the landing page should feature the action you want the user to take. In my case - subscribing.

I also reversed the color scheme and got rid of my banner image to reduce the distractions on the landing page.

Signal vs. Noise

What's next on the roadmap

The AI world moves at a relentless pace. Every week I see a new technology to try or learn how to use. I try to bucket headlines into "Signal" or "Noise"... I define them as:

Signal: Pay attention to this, it's relevant. This technology will help scale Spencer.

Noise: Distractions... cool news, but not relevant to Spencer right now.

Signal:

- Cross-posting my Newsletter to X, LinkedIn, Instagram, TikTok, etc...

In a world where building products is easier than ever, solutions are not the moat - distribution and hype are.

I need people to know who I am, and what I can do. By automating my posting to other social media surfaces on a daily basis, I should be able to grow my following autonomously.

At the very least, I will create minor ripples, which is better than being silent.

- Automating replies in my email and in my inboxes on those social media platforms

Once I start posting every day, I expect people to start messaging me (shocker). I barely have the time to answer people as-is, but there are many tools I can build now that will help me scale up my response capabilities.

Learning how to automate these replies will teach me the same lessons as automating customer support and warm responses.

Signal headlines:

Noise:

- Vibe-coding a product

As tempting as it is to build something and be creative right now, I need to finish the first version of my "content machine."

The good news is that I am quite close - I have relevant data sources as my inputs (Twitter, blogs) and they will improve as I find more sources, and better ways to persist the data.

I like to think that by the end of July - I should be able to start building a product, but that timeline may be off as well.

- Quitting

Quitting is also on the table. There were several moments where I doubted the feasibility of what I'm trying to pull off.

Unfortunately, learning AI skills is too important now. I couldn't quit even if I wanted to (which I don't) - and this stuff is pretty fun when I don't want to destroy my computer out of frustration.

The world is upside down: the biggest risk is not taking any, and the smallest risk is taking as many big shots as possible. With some luck, one of them should hit.

Noise headlines:

That wraps up this edition of "The Changelog." Thanks for tuning in - see you in a week? Maybe two?

Have a great week!